
Most monitoring tools weren't built for the AI-first world.

Traditional monitoring platforms force you out of your natural coding environment and trap you in clunky web interfaces, brittle configuration panels, and rigid APIs. And sadly, when monitoring providers do offer "AI features," it's usually a chatbot bolted onto their existing UI: a pale imitation of the AI tools you’re reading about every day on Hacker News.

All this creates friction. You code in your IDE, then context-switch to a completely different environment just to set up your application monitoring. It's slow, it's frustrating, and it breaks your flow.

As you’ve probably noticed, it’s 2025, and AI-assisted coding isn’t a nice-to-have anymore. AI-powered development tools such as Cursor and GitHub Copilot have fundamentally changed how engineers work. How you work! You prototype faster, generate code in seconds, and build comprehensive test suites, all from within your IDE using the newest and hottest programming language out there: plain English.

Shouldn’t these AI-first principles apply to application monitoring, too? Of course, they should.

At Checkly, we’re ready for your LLM agents to configure, test, and even deploy (if you want them to) your monitoring infrastructure, thanks to “Monitoring as Code” (MaC).

How did we get here? Because everything in Checkly can be defined as code, we were AI-ready from day one!

Built for AI workflows from day one

Checkly is a monitoring and reliability platform delivered as code.

Your checks, alerts, environments, and configuration all live in your repository alongside your application code. And while you can control your monitoring setup via our web interface, we prefer (and actually recommend) that you define your monitoring infrastructure in code. Stay in your editor, version control your environment, and automate away all these cumbersome UI updates.

AI tools work with Checkly immediately. There are no plugins, no integrations, and no limitations. Open Cursor or Copilot and start describing what you want to monitor, and off you go!

This AI-first workflow is possible thanks to “Monitoring as Code”. With MaC, engineers can:

- Create checks programmatically.

- Define all monitoring resources as code.

- Test new monitors against preview environments from the CLI.

- Deploy their application and the connected end-to-end monitoring at the same time.
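As a rough illustration, a Checkly project is anchored by a `checkly.config.ts` file at the repository root. The sketch below follows the shape described in the Checkly CLI docs; the project name, logical ID, repo URL, locations, and glob patterns are placeholder assumptions you would adapt to your own repository. With a config like this in place, `npx checkly test` runs the matching checks from the CLI and `npx checkly deploy` publishes them.

```typescript
// checkly.config.ts: a minimal sketch; names, URLs, and patterns are placeholders.
import { defineConfig } from 'checkly'
import { Frequency } from 'checkly/constructs'

export default defineConfig({
  projectName: 'AI Tryout Monitoring', // placeholder
  logicalId: 'ai-tryout-monitoring', // placeholder; keep it stable, it identifies the project
  repoUrl: 'https://github.com/acme/ai-tryout', // placeholder
  checks: {
    // Defaults applied to every check unless a check overrides them.
    frequency: Frequency.EVERY_10M,
    locations: ['us-east-1', 'eu-west-1'],
    tags: ['website'],
    // Where the CLI looks for check definitions.
    checkMatch: '**/__checks__/**/*.check.ts',
    browserChecks: {
      // Playwright specs matched here become browser checks.
      testMatch: '**/__checks__/**/*.spec.ts',
    },
  },
  cli: {
    // Location used when running checks with `npx checkly test`.
    runLocation: 'eu-west-1',
  },
})
```

Because the defaults live in one file, an AI agent can change the frequency or locations for every check with a single, reviewable diff.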

And guess what? AI is more than capable of handling these tasks. All you have to do is provide enough context and teach it how.

At Checkly, we offer multiple ways to enrich your LLM conversation with information that gives it monitoring superpowers. Hand your agents the official docs or our custom LLM instructions, and let them get to work.

Then, your AI-powered IDE can:

- Create monitoring checks in seconds. Point to the code powering your API and watch your favorite LLM generate a complete monitoring setup with proper assertions and error handling.

- Modify monitoring at scale. Refactor and update alert thresholds or monitoring locations across dozens of checks automatically.

- Iterate rapidly on complex user scenarios. Monitor critical user flows in real browsers by describing them in plain English, and let AI create end-to-end testing scripts for you.

Does this sound too good to be true? Let’s see things in action.

How teams use AI with Checkly

The following examples explain how we and our customers use AI with Checkly.

Note that the examples use the Cursor AI editor; however, any AI-assisted editor can create, update, test, or even deploy your monitoring infrastructure.

Scan your project and create monitoring checks

Let’s say you’ve just added a new API endpoint to your application and want to start monitoring it in production. You could write all the monitoring code yourself, but that’s no fun! Let’s instruct AI to do the job for you.
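To make the scenario concrete, imagine the new endpoint is a tiny Next.js App Router route handler like the hypothetical one below. The file path `app/api/hello/route.ts` and the response body are assumptions for illustration only. The handler uses just the standard Web `Response` API, so it also runs outside Next.js in Node.js 18 or later.

```typescript
// app/api/hello/route.ts: a hypothetical endpoint used for illustration.
// In the Next.js App Router, an exported GET function handles HTTP GET requests.
export function GET(): Response {
  const body = { status: 'ok', service: 'hello' }
  return new Response(JSON.stringify(body), {
    status: 200,
    headers: { 'Content-Type': 'application/json' },
  })
}
```

An endpoint this small already gives a monitor two things to assert on: the HTTP status code and a field in the JSON body.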

If the application relies on a framework (which it most likely does), you’re in luck. Cursor’s @Docs command lets you add the relevant documentation to your AI conversation. The more context you provide, the better the LLM understands what it’s dealing with.

For the best results when creating your monitoring resources, we recommend also including the Checkly docs and our custom Checkly instructions.

In this case, Cursor will automatically figure out what API endpoint was added, ask follow-up questions, and create the required monitoring resources for you.

Thanks to the provided context (the Next.js docs, the Checkly docs, and the custom instructions), the Cursor agent understands how to configure Checkly monitoring and creates a new `*.check.ts` file that becomes a new API monitor once it’s deployed.
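For illustration, a generated check file might look roughly like the sketch below. It uses the `ApiCheck` construct and `AssertionBuilder` from the Checkly CLI's `checkly/constructs` module. The `/api/hello` path, the logical ID, the thresholds, and the expected `status` field are hypothetical; what the agent actually produces depends on your endpoints and the conversation.

```typescript
// __checks__/hello-api.check.ts: hypothetical file name, endpoint, and values.
import { ApiCheck, AssertionBuilder, Frequency } from 'checkly/constructs'

new ApiCheck('hello-api-check', {
  name: 'Hello API',
  activated: true,
  frequency: Frequency.EVERY_5M,
  locations: ['us-east-1', 'eu-west-1'],
  // Response-time thresholds in milliseconds: degraded first, then failed.
  degradedResponseTime: 2000,
  maxResponseTime: 5000,
  request: {
    method: 'GET',
    // Hypothetical route on the demo deployment used in the prompt.
    url: 'https://checkly-ai-tryout.vercel.app/api/hello',
    assertions: [
      AssertionBuilder.statusCode().equals(200),
      AssertionBuilder.jsonBody('$.status').equals('ok'),
    ],
  },
})
```

The first constructor argument is the check's logical ID; keeping it stable lets later deploys update the same monitor instead of creating a new one.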

And because the LLM agent has read the docs and knows how to use the Checkly CLI, it will test the new monitoring and confirm that it works in the same conversation.

Honestly, this flow feels like magic! And if you’re curious, you can watch and follow this exact flow on YouTube.

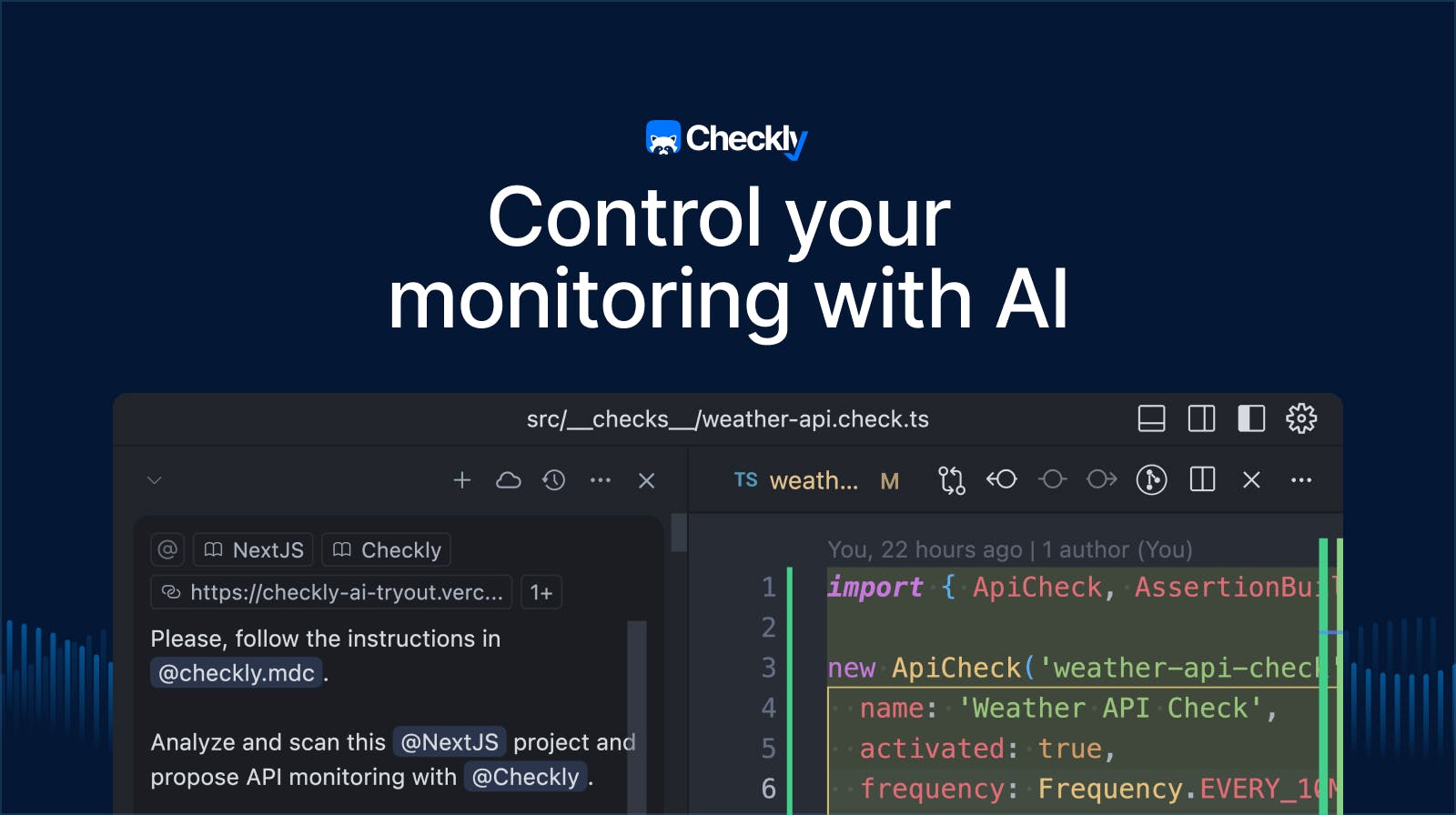

Please follow the instructions in @checkly.mdc.

Analyze and scan this @NextJS project and propose API monitoring with @Checkly.

The project is deployed at `@https://checkly-ai-tryout.vercel.app`.

Please ask me follow up questions if you're unsure about something.

When all questions are answered, please go ahead, set up the monitoring and verify/test that everything works.

Don't deploy the project. Only verify that the monitoring works.

But that’s not all!

Modify your monitoring at scale

Once you start monitoring your project with Checkly, you’ll end up with config files for all your critical monitoring resources. Updating all these files can be cumbersome for a large-scale project.

But here’s the good news: if all your monitoring configuration is stored in code, you can let AI do all the updates. Forget about updating multiple files manually or, god forbid, getting sore fingers from clicking the same buttons over and over again.

I cannot stress this enough: extensive context is the secret ingredient here. If you add the Checkly docs and custom instructions to your LLM conversation, you can pour yourself a coffee and let the agent figure out what to do with your monitoring setup.
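To show why code-based configuration makes bulk changes cheap, here is a dependency-free TypeScript sketch of one refactor an agent can apply when it touches many check files: pull repeated values into a single shared object so the next change is a one-line edit. The `ApiCheckSettings` shape and the `defineApiCheck` helper are hypothetical simplifications, not Checkly's real types; in an actual project you would spread such shared values into your `ApiCheck` constructs or set them as project-wide defaults in `checkly.config.ts`.

```typescript
// Hypothetical, simplified check settings for illustration only.
// These are NOT Checkly's construct types.
interface ApiCheckSettings {
  name: string
  url: string
  locations: string[]
  degradedResponseTime: number // milliseconds
  maxResponseTime: number // milliseconds
}

// Single source of truth for values that many checks share.
const sharedDefaults = {
  locations: ['us-east-1', 'eu-west-1'],
  degradedResponseTime: 2000,
  maxResponseTime: 5000,
}

// Builds one check: shared defaults first, then per-check overrides.
function defineApiCheck(
  name: string,
  url: string,
  overrides: Partial<ApiCheckSettings> = {},
): ApiCheckSettings {
  return { name, url, ...sharedDefaults, ...overrides }
}

const checks: ApiCheckSettings[] = [
  defineApiCheck('Hello API', 'https://example.com/api/hello'),
  defineApiCheck('Users API', 'https://example.com/api/users'),
  // A slow reporting endpoint loosens one threshold without touching the rest.
  defineApiCheck('Reports API', 'https://example.com/api/reports', {
    maxResponseTime: 10000,
  }),
]

for (const check of checks) {
  console.log(`${check.name}: max ${check.maxResponseTime} ms in ${check.locations.join(', ')}`)
}
```

Raising `maxResponseTime` in `sharedDefaults` then updates every check that doesn't override it, which keeps the diff an agent (or you) produces small and easy to review.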

Describe and create end-to-end testing flows

I hope I have convinced you to hand over some monitoring configuration tasks to AI by now. Could you also instruct LLMs to test user flows with real browsers?

At Checkly, we’re big Playwright fans. The Microsoft project shines with a solid developer experience, regular releases, and smart ways to avoid test flakiness. It’s the best tool for end-to-end testing and synthetic monitoring.

Letting AI write monitoring configuration files is one thing, but how good is it at generating Playwright code?

Many people have tried automating UI flows using AI, and the results have always been disappointing. Until now, there was no way to give LLMs the context they need: the modern web is just too complex. We learned that asking an LLM to test an application from memory only leads to hallucinated end-to-end testing chaos. Feeding it the application code was a better approach, but it still fell short of good results.

To give your AI conversation the context it needs to create working end-to-end tests, you need to let the LLM drive a real browser, perform user actions, and receive page and application snapshots representing the current browser state.

Luckily, the Playwright team listened to the community and released the Playwright MCP server to do just that. Thanks to Anthropic’s Model Context Protocol (MCP), you can now let AI control your machine’s browsers. Each browser action it performs then provides enough context for an LLM to generate Playwright tests based on real application state.

With this missing piece of the puzzle in place, we can instruct the agent to perform a user flow in the browser, and the conversation will include additional context, such as the generated Playwright code, the current page state, and a page snapshot.

USER: Please navigate to checklyhq.com.

---

AI: Called `browser_navigate`:

```js

await page.goto('https://checklyhq.com');

```

// Additional context (page URL, page title and the page snapshot)

// ...

// ...

---

USER: Now please click on "Login".

---

AI: Called `browser_click`:

```js

await page.getByRole('link', { name: 'Login' }).click();

```

// Additional context (page URL, page title and the page snapshot)

// ...

// ...

And I’ll say it one last time: context is the secret sauce. Now that we can provide page snapshots, it only takes a bit of prompt engineering to turn your favorite LLM into a Playwright test generator.

The AI can then take your instructions, run them in a real browser, and generate a Playwright test based on the resulting page context. Custom instructions along these lines get you there:

You are a Playwright test generator and an expert in TypeScript, Frontend development, and Playwright end-to-end testing.

- You are given a scenario and you need to generate a Playwright test for it.

- If you're asked to generate or create a Playwright test, use the tools provided by the Playwright MCP server to navigate the site and generate tests based on the current state and site snapshots.

- Do not generate tests based on assumptions.

- Use the Playwright MCP server to navigate and interact with sites.

- Access page snapshot before interacting with the page.

- Only after all steps are completed, emit a Playwright TypeScript test that uses @playwright/test based on message history.

- When you generate the test code in the 'tests' directory, ALWAYS follow Playwright best practices.

- When the test is generated, always test and verify the generated code using `npx playwright test` and fix it if there are any issues.

Generating Playwright tests with AI is a huge topic by itself, but check out our YouTube channel if you want to see this flow in action!

Once you’ve generated your Playwright `spec.ts` files, you’re pretty much done. A Checkly monitoring project can pick up your Playwright test directory, or, if you prefer, you can instruct the LLM to create new `BrowserCheck` constructs for more control over your new Playwright monitors.
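If you go the construct route, a `BrowserCheck` that wraps an existing Playwright spec could look roughly like the sketch below. The file names, logical ID, frequency, and locations are assumptions; `code.entrypoint` points the check at the spec file, and the Checkly CLI picks it up from there when you run `npx checkly test` or `npx checkly deploy`.

```typescript
// __checks__/login.check.ts: hypothetical file names and values.
import * as path from 'path'
import { BrowserCheck, Frequency } from 'checkly/constructs'

new BrowserCheck('login-browser-check', {
  name: 'Login flow',
  frequency: Frequency.EVERY_10M,
  locations: ['us-east-1', 'eu-west-1'],
  code: {
    // Path to an existing Playwright spec, e.g. one the agent generated.
    entrypoint: path.join(__dirname, 'login.spec.ts'),
  },
})
```

Compared with letting the project pick up a whole test directory, an explicit construct lets you tune frequency, locations, or alerting for this one flow.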

Read more about the Checkly CLI and Checkly constructs.

The advantages of an AI-ready workflow

These three examples are only the beginning of the Checkly AI journey!

Other development tools view AI-assisted IDEs as competition for screen time; they hope to build AI wrappers into their UIs that can replace Cursor, Copilot, and all their mighty AI-powered friends. This approach won’t work, though, because it results in unnatural UI patterns, such as proprietary chatbots, rigid scripting interfaces, or limited “AI query” features added to visual workflows.

With Checkly, your monitoring already lives in your repository, so you can take advantage of the latest and greatest AI models immediately. You don’t need to wait for a product release to start using AI. Your monitoring setup is “just” code. So go ahead: open your favorite editor, describe what you want to monitor, and let the LLM write the check for you. You’ll be surprised how good the results can be!