I’ll get right to the point: Not uniting testing and monitoring is costing you expensive engineering time, sales, and customer confidence.

Here are some quick facts:

💰 - The most expensive way to find a bug is to have your customers let you know

🤖 - Developer-first synthetic monitoring exists

🎥 - Your E2E tests should be running in production

Below you’ll find an all too familiar scenario that outlines the problems of traditional testing and monitoring approaches and what the benefits are of a united approach to testing and monitoring through monitoring as code (MaC).

Traditional testing and monitoring approach

It’s Friday, and Laura, one of your best devs, has just made a change to the universal button component because design systems are a thing and they’ve tested it thoroughly using component testing and e2e tests.

The entire test suite is passing quickly and without issue locally. That same code and those same tests are now making their way to running in staging and after that production.

After about an hour the changes have been merged into the production branch without any issues.

An hour later, we’re seeing reports coming in that customers can’t login or submit payments!

What happens next:

Reports come in. Someone in Ops has been alerted, a Slack or Microsoft Teams channel is spun up, partners from across the organization are digging into artifacts, Laura is answering questions about her changes, the team is considering a rollback, we’re looking at our services, and everyone is moving in different directions at the same time to figure out what’s going on.

Customer success is blowing up the #support channel because our enterprise partners can’t use the platform. Leadership across the organization is impacted and we’re sending our “we’re looking into this” default messaging.

Is it us or something else?

Eventually you find out that AWS had a partial outage mainly only impacting the US.

Everything winds down, the doomsday clock is set back to 10 until midnight, and the work goes on. If nothing changes, this will happen again.

The Impact:

- This exercise lasted over two hours

- Halted merging to production

- Impacted customer confidence

- Stressed everyone out

- Hourly cost of labor for 10 engineers involved w/ typical US salaries = $1800

- Loss of potential sales per hour = $10,000

Uniting Testing and Monitoring a MaC Workflow

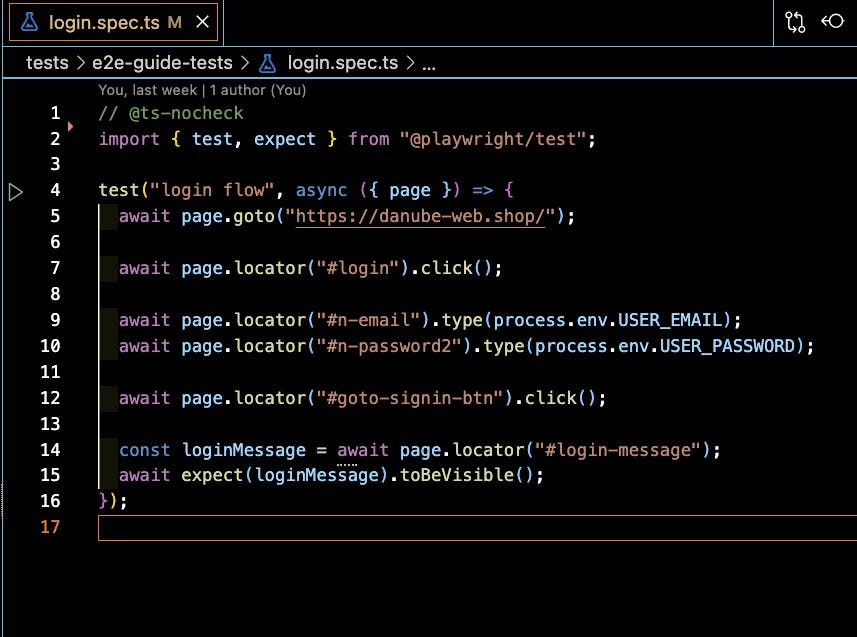

Now, let’s look at that same scenario but with a MaC workflow approach. With a MaC approach, those same tests Laura merged in staging and production are now also used to monitor staging and production immediately post-deployment. The tests ran successfully on staging and are running continuously without any issue on production until later this evening they start failing:

This means that as soon as our synthetics and APIs start failing, the team starts getting alerted in the channels they use. We’re running those checks in multiple locations, so the team sees that only some regions in the US are failing and not others. The team is also confident that Laura’s changes didn’t cause these issues, because they were tested immediately after deployment and continuously moving forward.

We’ve united testing and monitoring so the artifact gathering and time to resolution is shortened dramatically.

Our firewatch team has everything they need to inform leadership across the organization that more than likely we are seeing an AWS-related outage and that we can take some quick action.

The team can flip a feature switch that throws up a services banner and informs support how to respond to customers.

The Impact:

- This exercise lasted 30 minutes or less

- Code kept moving along

- Our customers felt like they were supported by a professional organization

- Our QA & Ops folks weren’t pointing fingers at each other

Want to learn more?

Interested in learning more about how to take your testing and monitoring to the next level? Join Checkly Head of Developer Relations Stefan Judis and I on April 13th, 2023 at 6:00pm CEST / 9:00am PT / Noon ET to learn how to unite testing and monitoring with the Checkly CLI. See you there!